In today’s day and age, the internet is brimming with data, like an Olympic swimming pool full of water. Proxies are an essential tool for web scraping and automation. They can help you steer clear of malicious organizations and malware while protecting your online activity.

What happens when you need a multitude of proxies? This is where proxy pools take center stage.

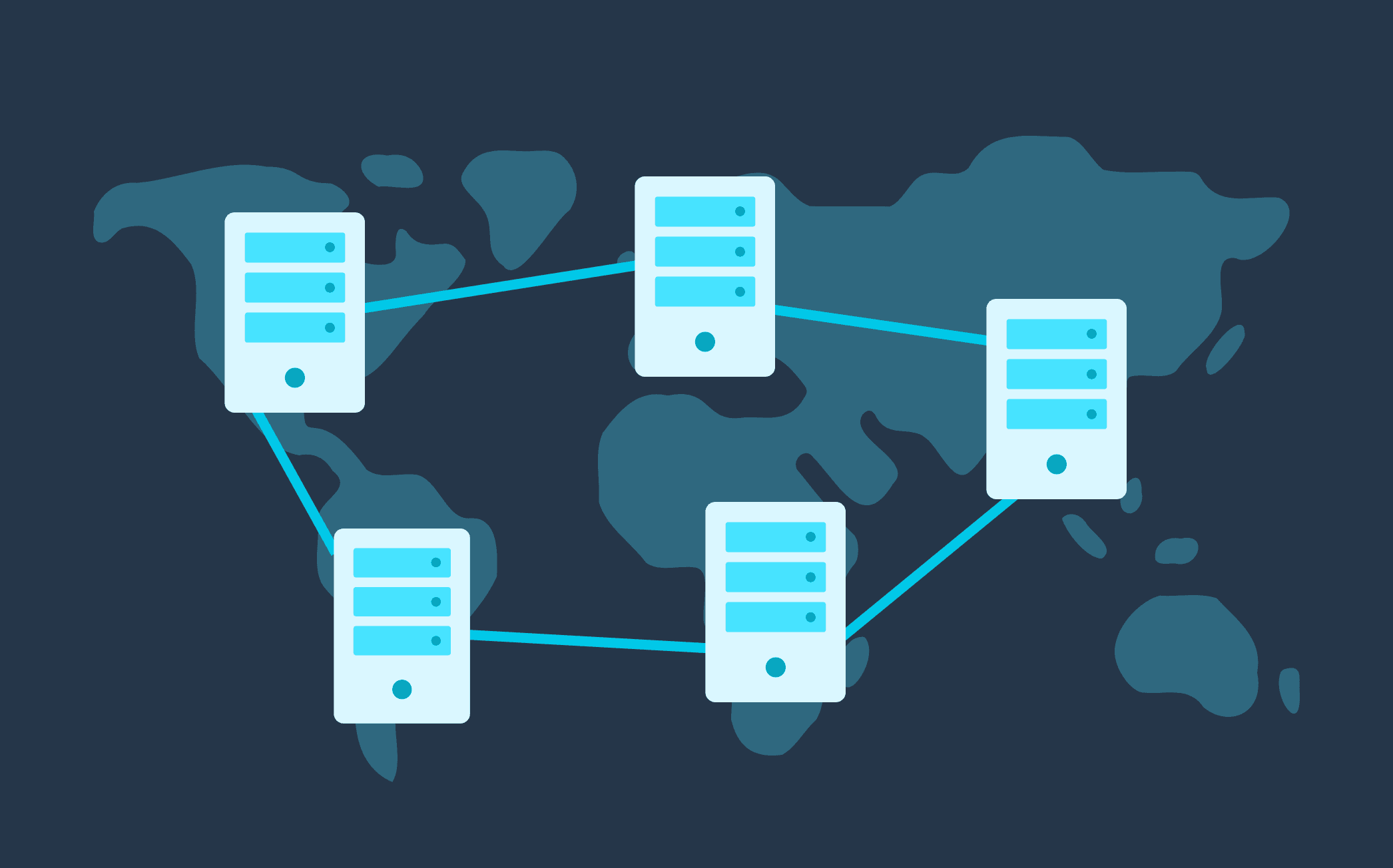

First, we must ask what proxy pools are and how they change the way we interact with the internet. A proxy pool is a reservoir of proxy servers that you can purchase or access. With a proxy pool, users can dynamically distribute their web requests across numerous IP addresses.

How? That’s precisely what we’ll go over. Let’s take a look.

What is a Proxy Pool?

Think of a proxy as a tool that masks your IP address; now, picture a proxy pool as a whole toolset. In the realm of web scraping and automation, proxy pools provide a crucial lifeline.

- The Proxy Server: A proxy server is like a middleman. It sits between your device and the website. Instead of the website seeing your IP address, the proxy server steps in and presents its own IP address. This effectively masks your IP address and covers your digital footprint.

- The Concept of a Proxy Pool: While a single proxy will do its job of securing your privacy online, a proxy pool strengthens that protection considerably. Imagine looking for one person in a room of just five people. Now do the same with 500 people. It’s a huge difference, right?

Proxy pools give you access to a vast selection of proxies, allowing you to switch seamlessly between IP addresses and making it harder for websites to flag you.
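To make the "whole toolset" idea concrete, here is a minimal Python sketch of a pool as a plain data structure that hands out a proxy per request. The `ProxyPool` class and the proxy addresses (taken from the 203.0.113.0/24 documentation range) are hypothetical; real providers expose their pools through their own endpoints or dashboards.

```python
import random


class ProxyPool:
    """A minimal, hypothetical proxy pool: a list of proxy URLs to draw from."""

    def __init__(self, proxies, seed=None):
        if not proxies:
            raise ValueError("a proxy pool needs at least one proxy")
        # De-duplicate while preserving order, so each IP appears only once.
        self.proxies = list(dict.fromkeys(proxies))
        self._rng = random.Random(seed)

    def __len__(self):
        return len(self.proxies)

    def get(self):
        # Pick any proxy from the pool, so successive requests can leave
        # from different IP addresses.
        return self._rng.choice(self.proxies)


pool = ProxyPool(
    [
        "http://203.0.113.10:8080",
        "http://203.0.113.11:8080",
        "http://203.0.113.12:8080",
    ],
    seed=1,
)
print(len(pool), pool.get())
```

The bigger the list, the more identities each request can hide among, which is the five-versus-500 point above.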

How Proxy Pools Work

Now that you know what a proxy pool is, it’s time to discuss how it works. Understanding each step is crucial to making the right choice for you. Let’s peel back each layer and see how everything fits together.

- The Pool Itself: A proxy pool is not just a collection but an arsenal of identities, with each proxy holding a distinct IP address.

Reputable proxy providers invest resources in building large, diverse pools that can contain thousands of IP addresses. They offer a range of server locations, giving you considerable flexibility.

- Making a Request: Your interaction with a proxy pool usually begins when you send a request to the target website. A good provider makes this process seamless, so your browsing feels uninterrupted.

- Behind the Scenes: It’s time to bring all of this together. Let’s visualize your web request.

- Proxy Selection: When you send your request, your pool provider’s system automatically selects an appropriate proxy server. It may weigh factors such as speed, geographical location, or any other specific requirements you have.

- Relay and Response: Your request puts on a high-tech costume. It is forwarded through the selected proxy server, which swaps your IP address for the proxy server’s own.

The target website examines the request and sees it as coming from the proxy server. This hides your identity, and any data sent back from then on is routed through the proxy.
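The selection and relay steps above can be sketched with Python’s standard library. This is an illustration under assumptions, not how any particular provider works: the `Proxy` record, its `country` and `latency_ms` fields, and the `select_proxy` and `opener_via` helpers are hypothetical, while `urllib.request.ProxyHandler` and `urllib.request.build_opener` are the real standard-library calls for routing requests through a proxy.

```python
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass
class Proxy:
    url: str           # e.g. "http://203.0.113.10:8080" (hypothetical address)
    country: str       # country code of the exit IP, e.g. "US"
    latency_ms: float  # last measured round-trip time


def select_proxy(pool, country: Optional[str] = None) -> Proxy:
    """Proxy selection: keep proxies in the requested location (if any),
    then prefer the fastest one."""
    candidates = [p for p in pool if country is None or p.country == country]
    if not candidates:
        raise LookupError(f"no proxy available for country={country!r}")
    return min(candidates, key=lambda p: p.latency_ms)


def opener_via(proxy: Proxy) -> urllib.request.OpenerDirector:
    """Relay: requests made with this opener are forwarded through the proxy,
    so the target site sees the proxy's IP address instead of yours."""
    handler = urllib.request.ProxyHandler({"http": proxy.url, "https": proxy.url})
    return urllib.request.build_opener(handler)


pool = [
    Proxy("http://203.0.113.10:8080", "US", 120.0),
    Proxy("http://203.0.113.11:8080", "US", 45.0),
    Proxy("http://198.51.100.7:3128", "DE", 30.0),
]
chosen = select_proxy(pool, country="US")
opener = opener_via(chosen)
# opener.open("https://example.com") would now be relayed through `chosen`.
```

Picking by lowest latency is just one plausible rule; a provider could equally weigh load, IP reputation, or your plan’s limits.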

Managing IP Quality in Proxy Pools

Proxy providers must maintain both their IPs and the quality of those IPs. This is a lengthy, multi-step process: purchasing the IPs, configuring the proxies on a server via a script, and then monitoring them to keep connections reliable.

The provider configures the script with port numbers, authentication methods, and connection limits so that the proxies meet the needs of the customer’s product.
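As a rough illustration of those settings, the sketch below models one proxy endpoint with a port, username/password authentication, and a connection limit. `ProxyEndpoint` and its fields are hypothetical names, and enforcing the limit with a `threading.BoundedSemaphore` is simply one easy way to cap concurrent use, not necessarily what providers run.

```python
import threading
from dataclasses import dataclass, field
from urllib.parse import quote


@dataclass
class ProxyEndpoint:
    """Hypothetical per-proxy settings a provider's setup script might apply."""

    host: str
    port: int
    username: str = ""
    password: str = ""
    max_connections: int = 10
    _slots: threading.BoundedSemaphore = field(init=False, repr=False, compare=False)

    def __post_init__(self):
        if not 1 <= self.port <= 65535:
            raise ValueError(f"invalid port: {self.port}")
        # One slot per allowed concurrent connection.
        self._slots = threading.BoundedSemaphore(self.max_connections)

    def url(self) -> str:
        """Proxy URL with percent-encoded credentials, so characters such as
        '@' or ':' in a password do not break the URL."""
        auth = ""
        if self.username:
            user = quote(self.username, safe="")
            pwd = quote(self.password, safe="")
            auth = f"{user}:{pwd}@"
        return f"http://{auth}{self.host}:{self.port}"

    def acquire(self, timeout=None) -> bool:
        """Claim a connection slot; returns False if none frees up in time."""
        return self._slots.acquire(timeout=timeout)

    def release(self) -> None:
        """Give a connection slot back."""
        self._slots.release()


endpoint = ProxyEndpoint("203.0.113.10", 8080, "customer", "p@ss:word", max_connections=2)
print(endpoint.url())  # http://customer:p%40ss%3Aword@203.0.113.10:8080
```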

Quality proxy providers constantly monitor the health and stability of their pools. They periodically replace IPs or servers that show red flags, and the responsible provider deals with degraded performance or abused IPs.

Another way providers ensure proxy pool quality is by continually scaling their pool size, analyzing the current market, and adjusting accordingly.

Providers also enforce a rotation policy for abused or degraded proxies. If a proxy is suspected of abuse, the provider automatically rotates it out or replaces it. This keeps the pool’s reputation healthy.
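A simplified version of that monitoring-and-rotation policy might look like the following. `ManagedPool`, the failure threshold, and the reserve of spare IPs are assumptions made for illustration; real providers rely on their own health signals and abuse reports.

```python
from collections import deque


class ManagedPool:
    """Hypothetical provider-side pool: proxies that fail too often are
    retired and replaced from a reserve of spare IPs."""

    def __init__(self, active, reserve, max_failures=3):
        self.active = list(active)
        self.reserve = deque(reserve)
        self.max_failures = max_failures
        self.failures = {p: 0 for p in self.active}
        self.retired = []

    def report(self, proxy, ok):
        """Record one health-check or request result for a proxy."""
        if proxy not in self.failures:
            return  # already retired or unknown: nothing to do
        if ok:
            self.failures[proxy] = 0  # healthy again, reset the counter
            return
        self.failures[proxy] += 1
        if self.failures[proxy] >= self.max_failures:
            self._rotate_out(proxy)

    def _rotate_out(self, proxy):
        # Remove the degraded or abused IP and swap in a spare, if one exists.
        self.active.remove(proxy)
        del self.failures[proxy]
        self.retired.append(proxy)
        if self.reserve:
            spare = self.reserve.popleft()
            self.active.append(spare)
            self.failures[spare] = 0


pool = ManagedPool(["203.0.113.10", "203.0.113.11"], reserve=["203.0.113.50"], max_failures=2)
pool.report("203.0.113.10", ok=False)
pool.report("203.0.113.10", ok=False)  # second strike: rotated out
print(pool.active)  # ['203.0.113.11', '203.0.113.50']
```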

Proxy Pools for Web Scraping and Automation

Using a proxy pool for web scraping and automation brings several advantages that make it worth the purchase. Here’s how a premium proxy pool can help:

- Web Scraping: Proxy pools let you rotate proxies, which lowers your odds of being spotted by a website’s anti-bot measures. Scraping from a single IP address can quickly get that address blacklisted and blocked.

Faster data collection is another key benefit. Because a proxy pool automatically distributes requests across many proxies, you can send more requests without overloading any single IP, so data comes in sooner.

- Automation: Automated processes also benefit from proxy pools. Repetitive tasks, such as form submissions or site monitoring, can run with less risk of triggering anti-bot mechanisms.

If one of your provider’s proxy servers has an outage or malfunctions, your automated tasks can switch to a different proxy in the pool. This keeps your tasks from being suddenly interrupted.
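Both benefits, rotation for scraping and failover for automation, can be sketched in one small client. `RotatingClient` and `urllib_fetch` are hypothetical helpers. Rotation here is plain round-robin via `itertools.cycle`, and any `OSError` triggers a switch to the next proxy; urllib’s `URLError` and `HTTPError` are `OSError` subclasses, so a blocked request (for example, an HTTP 403) also counts as a failure.

```python
import itertools
import urllib.request


def urllib_fetch(url, proxy, timeout=10.0):
    """Fetch `url` through `proxy`; network and HTTP errors surface as OSError."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()


class RotatingClient:
    """Hypothetical client: round-robin rotation with failover."""

    def __init__(self, proxies, fetch=urllib_fetch):
        self.proxies = list(proxies)
        self._cycle = itertools.cycle(self.proxies)
        self.fetch = fetch  # fetch(url, proxy) -> result, raising OSError on failure

    def get(self, url):
        last_error = None
        # Each call starts at the next proxy in rotation and tries every
        # proxy at most once before giving up.
        for _ in range(len(self.proxies)):
            proxy = next(self._cycle)
            try:
                return self.fetch(url, proxy)
            except OSError as exc:
                last_error = exc  # outage or block: fail over to the next proxy
        raise RuntimeError(f"all proxies failed for {url}") from last_error


# Offline demo: a fake fetch in which one proxy is "down".
def fake_fetch(url, proxy):
    if proxy == "http://203.0.113.11:8080":
        raise OSError("simulated outage")
    return f"{url} via {proxy}"


client = RotatingClient(
    ["http://203.0.113.10:8080", "http://203.0.113.11:8080", "http://203.0.113.12:8080"],
    fetch=fake_fetch,
)
for page in ["/a", "/b", "/c"]:
    print(client.get(page))
```

In this run, the request for `/b` lands on the failing proxy and is retried on the next one, while the other pages keep rotating as usual.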

Considerations and Limitations of Proxy Pools

Using proxy pools is usually beneficial for both individuals and organizations. However, you should be aware of the challenges and considerations you might face.

Considerations:

- Technical Complexity: Implementing and managing proxy pools can be technically demanding. It requires know-how for integration and ongoing management.

Users should also be prepared for complications when using proxy pools, such as IPs getting blocked and problems with proxy rotation.

- Malicious Providers: How well a proxy pool performs depends on how well the provider maintains it. Buying from reputable, quality providers will drastically improve your experience.

Make sure your provider obtains its IPs from reputable sources to avoid security risks or malicious proxies.

- TOS Violations: Make sure you aren’t violating your target website’s terms of service (TOS) when sending requests. Check its terms of use beforehand so you don’t run into legal issues.

Limitations:

- Geographical Limitations: A proxy pool might not include proxies in the region you are looking for. If the content you’re trying to access is region-locked, you need proxies located in that region.

- Rotation of Pools: High-quality providers often rotate their pools to preserve anonymity and distribute traffic. While this is an advantage for some users, it can disrupt the browsing experience for others.
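To check the geographical limitation before committing to a provider, you could compare the regions you need against the pool’s coverage. The `(url, country)` pairs and the helper names below are assumptions for illustration; providers report proxy locations in their own formats.

```python
from collections import defaultdict


def proxies_by_region(pool):
    """Group (proxy_url, country_code) pairs by upper-cased country code."""
    regions = defaultdict(list)
    for url, country in pool:
        regions[country.upper()].append(url)
    return dict(regions)


def missing_regions(pool, required):
    """Return the required country codes that the pool cannot serve."""
    available = set(proxies_by_region(pool))
    return sorted({code.upper() for code in required} - available)


pool = [
    ("http://203.0.113.10:8080", "US"),
    ("http://198.51.100.7:3128", "de"),
]
print(missing_regions(pool, ["US", "DE", "JP"]))  # ['JP']
```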

Conclusion

We’ve dug deep into what proxy pools are and how they can benefit you. Protecting your online presence, especially while web scraping, is often overlooked. If you’ve read this far, you now understand how proxy pools work and what they offer.

If a proxy pool has caught your eye, make sure you choose a reliable, top-notch provider. Our recommendation is SpeedProxies.